Data Engineering Consulting

Build scalable AI/ML platforms to discover better insights with diversified data engineering consulting services

Talk to Our Data Engineering Consultant

Data engineering consulting helps you assess your data setup, build pipelines and governance, migrate to cloud, and run operations - so your team stops spending over 70% of their time on data prep.

Our data engineering consulting covers advising, building, and operating. You get a platform that works in production and stays reliable.

We map your target platform, pick tools, and set a clear migration plan you can follow.

We implement data lakes, warehouses, and lakehouses with automated pipelines and testing so you get repeatable results.

We set catalog rules, lineage and quality checks so you trust your data and meet audits.

We ingest data from APIs, files and legacy systems and transform it for analytics.

We build pipelines with alerts and retries so data arrives when you expect it.
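As a rough illustration of the alert-and-retry pattern, here is a minimal Python sketch. The helper name `run_with_retries`, its parameters, and the exponential backoff policy are assumptions made for this example, not a description of any specific product or tool.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def run_with_retries(
    task: Callable[[], T],
    max_attempts: int = 3,
    base_delay: float = 1.0,
    alert: Optional[Callable[[str], None]] = None,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run a pipeline step, retrying with exponential backoff.

    Hypothetical helper: `alert` is any callable that delivers a message
    (email, chat webhook, pager). It is called once, after the final failure.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception as exc:
            if attempt == max_attempts:
                # Out of retries: notify someone, then surface the error.
                if alert is not None:
                    alert(f"task failed after {attempt} attempts: {exc!r}")
                raise
            # Wait base_delay, 2 * base_delay, 4 * base_delay, ...
            sleep(base_delay * 2 ** (attempt - 1))
    raise AssertionError("unreachable")
```

A step would be wrapped as `run_with_retries(load_orders, alert=notify_on_call)`, where both names stand in for your own functions. Injecting `sleep` keeps the helper easy to test without real waiting.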

We deploy and monitor models, and automate retraining so models stay accurate.

We design schemas and tune performance so analysts get answers fast.

We store raw and curated data together and manage schema evolution for analytics and ML.

We create a virtual layer so you can query data across systems without copying everything.

We help teams own their data while keeping centralized guardrails for quality and security.

We scale processing and storage so large datasets do not slow your analytics.

We add CI/CD, testing and monitoring to your pipelines so you ship safely and fast.

Book a 30-minute workshop to map your current data gaps and get a clear, prioritized roadmap for next steps.

Schedule 30‑min call

Snowflake Cloud Data Platform

We implement Snowflake Data Cloud, so you separate storage and compute and pay only for what you use.

Sancus (AI-powered data quality)

We apply AI to clean and enrich data, so your reports and models use accurate inputs.

T-Ingestor (AI-powered data management)

We onboard sources fast with a metadata-driven ingestor that keeps catalogs current.

T-Voyager

We deliver visualization tools that help your teams spot trends and act on them.

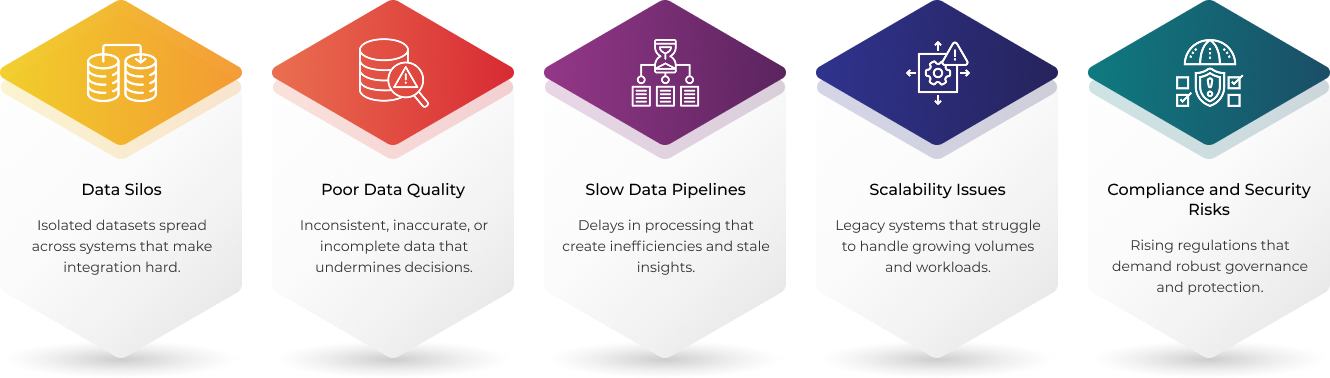

Get faster insights when data flows reliably into analytics and ML systems.

Remove silos so your teams can find and use trusted datasets fast.

Automate ingestion, transformation and delivery to cut manual work.

Protect sensitive data with controls that stand up to audits.

D.R.E.A.M Framework

We combine practical engineering, business context and reusable accelerators to deliver measurable outcomes.

We reuse proven components, so you get value faster and avoid unnecessary rework.

We apply tested delivery patterns focused on reuse, automation and operational maturity.

We tailor approaches for automotive, retail, telecom, finance, healthcare and manufacturing.

Try a guided demo of our ingestion and quality accelerators to see how they cut onboarding time for sources.

Request Free Demo

Accelerate generation and adoption of actionable insights through mature data platforms leveraging our data engineering solutions and services

We run automated checks, remove duplicates, and fix formats so teams get clean data fast. We add simple monitors and periodic audits so errors show up quickly and you can trust reports.
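To make the cleaning steps concrete, the sketch below normalizes two fields and drops duplicates in plain Python. The field names (`email`, `signup_date`) and the accepted date formats are assumptions for illustration; a real pipeline would take these from the source's metadata.

```python
from datetime import datetime

# Assumed input formats for this example; day-first is a choice, not a rule.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%Y%m%d")


def normalize_date(value: str) -> str:
    """Return the date as ISO 8601 (YYYY-MM-DD), or raise ValueError."""
    text = value.strip()
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {value!r}")


def clean_records(records):
    """Fix formats, then keep the first occurrence of each (email, date) pair."""
    seen = set()
    cleaned = []
    for rec in records:
        row = {
            "email": rec["email"].strip().lower(),
            "signup_date": normalize_date(rec["signup_date"]),
        }
        key = (row["email"], row["signup_date"])
        if key in seen:
            continue  # duplicate after normalization
        seen.add(key)
        cleaned.append(row)
    return cleaned
```

Normalizing before deduplicating matters here: two rows that differ only in letter case or date format count as the same record once they are cleaned.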

We prepare labeled, versioned datasets and build repeatable pipelines for features and training. That makes model runs reproducible and speeds deployment into production.

Files arrive in different shapes and at different times. We standardize formats, automate transforms, and set clear SLAs so data arrives consistent and on schedule.

We assess your stack, pick priority workloads, and migrate in phases. We test performance and governance after each phase so production stays stable.

We add governance, encryption, anonymization, and strict access controls. We keep lineage and audit logs so you can prove compliance quickly.

DataOps applies CI/CD, tests, and monitoring to data pipelines. It cuts manual fixes, speeds delivery, and keeps downstream teams working with trusted inputs.
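One way to picture a pipeline test is a small quality gate that a CI job runs against each batch before publishing it. The function name `check_batch` and its thresholds are hypothetical, chosen only for this sketch.

```python
def check_batch(rows, required, min_rows=1, max_null_rate=0.0):
    """Return a list of failure messages; an empty list means the batch passes.

    Hypothetical check: `rows` is a list of dicts and `required` lists the
    columns that must be populated.
    """
    failures = []
    if len(rows) < min_rows:
        failures.append(f"row count {len(rows)} below minimum {min_rows}")
    for col in required:
        # Treat missing keys, None, and empty strings as nulls.
        nulls = sum(1 for r in rows if r.get(col) in (None, ""))
        rate = nulls / len(rows) if rows else 0.0
        if rate > max_null_rate:
            failures.append(
                f"column {col!r} null rate {rate:.2f} exceeds {max_null_rate:.2f}"
            )
    return failures
```

In a CI step, a non-empty result would fail the build so that a bad batch never reaches downstream teams.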

We provide round-the-clock DBA and platform support, plus high availability and disaster recovery, so you minimize downtime and risk.

We apply role-based access, strong encryption in transit and at rest, and tokenization or anonymization where needed. We log access and alert on odd behavior.
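As a simplified illustration of replacing sensitive values with tokens, the sketch below uses a keyed hash (HMAC-SHA256) from the Python standard library. This is pseudonymization, not reversible vault-based tokenization, and the function names and the hard-coded demo key are assumptions for the example; in practice the key would come from a secrets manager.

```python
import hashlib
import hmac


def tokenize(value: str, secret_key: bytes) -> str:
    """Map a value to a stable 64-character hex token using HMAC-SHA256."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_record(record: dict, sensitive_fields: set, secret_key: bytes) -> dict:
    """Return a copy of the record with sensitive fields replaced by tokens."""
    return {
        key: tokenize(str(value), secret_key)
        if key in sensitive_fields and value is not None
        else value
        for key, value in record.items()
    }


# Hypothetical usage with a demo key; never hard-code a real key.
masked = mask_record(
    {"id": 7, "email": "ana@example.com"}, {"email"}, b"demo-key"
)
```

Because the same input and key always yield the same token, masked datasets can still be joined on the tokenized column without exposing the original value.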

We match tools to your goals, team skills, and budget. We run quick pilots when useful and pick the stack that lowers risk and speeds time-to-value.

We automate ingestion and transforms, centralize trusted datasets, and standardize names and lineage. Analysts spend more time on insight and less on cleaning.

Looking for Digital Transformation?

INDIANA:

201 N Illinois Street,

16th Floor - South Tower

Indianapolis, IN 46204

United States

ILLINOIS:

405 W Superior St, 707

Chicago, Illinois 60654

United States

AUSTRALIA:

Unit 605,

354 Church Street

Parramatta, Sydney, NSW 2150

Australia

Indore Office:

NRK Business Park,

901 A, PU4, Scheme No. 54, Vijay Nagar,

Indore,

Madhya Pradesh 452010,

India

Pune Office:

Nyati Empress,

Awfis, 9th Floor, Off Viman Nagar Road,

Viman Nagar,

Pune, Maharashtra 411014,

India

Hyderabad Office:

N Heights,

Level 6, Plot No. 38, Phase 2, HITEC City,

Hyderabad, Telangana 500081,

India
