How Databricks Integration with Power BI Unlocks Enterprise Insights

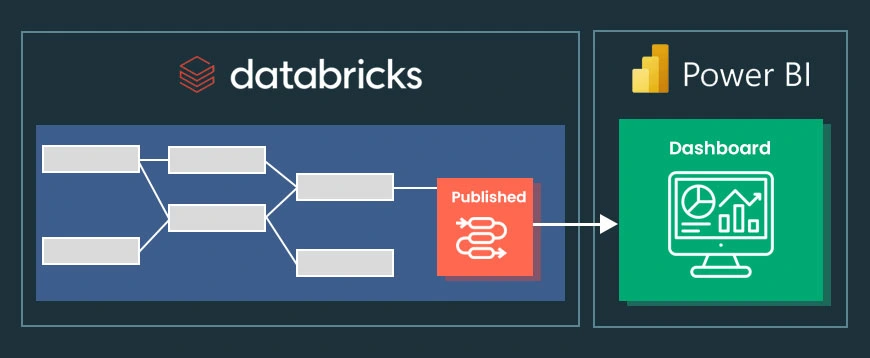

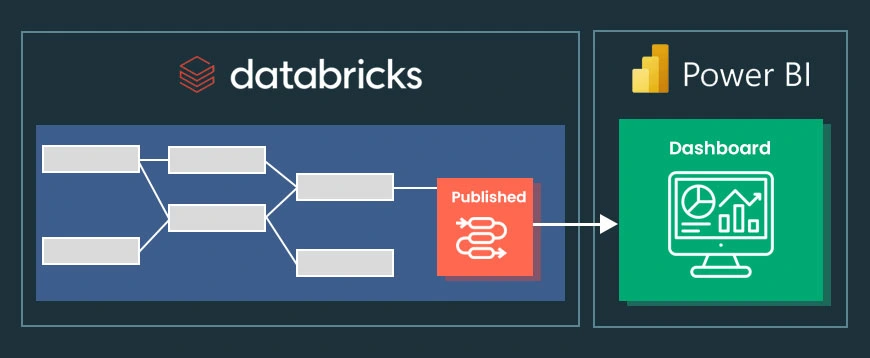

This guide walks you through integrating Power BI with Databricks in 2026. You’ll see the real benefits of this pairing, what you need before starting, and exactly how to connect these platforms step by step. You’ll learn to set up your environment, load your data, create visuals that tell a story, and keep everything accurate so your company can turn raw numbers into decisions that matter.

Businesses today need strong tools to make sense of their data. Power BI is one of the leading business intelligence platforms, and Databricks provides a unified platform for data engineering, analytics, and machine learning. Together, they let you move past static reports to analytics that stay current, scale with your data, and help teams work from the same numbers. Understanding how Databricks integrates with Power BI gives your organization the foundation to make smarter decisions faster.

What Are the Advantages of Connecting Power BI with Databricks?

When you connect these two platforms, you unlock benefits that can change how your organization handles data. Databricks handles storage and large-scale processing, and Power BI handles modeling and visualization, so together they cover the whole path from raw data to finished dashboard.

- Unified Data Processing and Visualization: You get Databricks’ serious data processing muscle working alongside Power BI’s easy-to-use visual tools. This means your whole analytics process flows smoothly, from the moment raw data comes in until you’re looking at interactive dashboards. No need to juggle different systems that don’t talk to each other.

- Real-time Data Exploration: Databricks can process streaming data as it arrives, so Power BI can show near-real-time information when you use DirectQuery or frequent refreshes. This matters when you need quick answers, like monitoring live operations or tracking changes in your market.

- Scalability and Performance: Databricks runs on Apache Spark, which distributes processing across a cluster, so heavy transformations and aggregations scale with your data. Power BI can then work with the prepared results, or query very large tables through DirectQuery, instead of loading everything into a single desktop model. Traditional BI setups often hit walls at this scale.

- Advanced Data Transformations: Heavy, reusable transformations can run in Databricks, while Power Query in Power BI handles lighter, report-specific shaping. Splitting the work this way keeps your data clean, trustworthy, and ready to analyze. Looking at different Databricks use cases shows you just how flexible it can be.

- Enhanced Collaboration: Teams can share work more easily when Databricks notebooks and Power BI reports are built on the same tables. This breaks down the walls between your data engineers, data scientists, and business analysts, because everyone works from the same data and reaches consistent insights.

- Enhanced Predictive Analytics: Databricks has machine learning tooling built in. Predictions and model outputs written to Delta tables can be loaded into Power BI like any other table, so you can build smarter data models and show forecasts and trends directly in your dashboards.

What Do You Need Before You Start the Integration?

Before you start the Power BI and Databricks integration, make sure you have everything you need. A good setup at the start makes everything go more smoothly later.

You’ll need Power BI Desktop, which runs on Windows. If you don’t have it yet, download it from Microsoft’s website or the Microsoft Store. This is what you’ll use to build reports and data models.

You also need access to a Databricks workspace. This could be on AWS, Azure, or GCP. If you’re just learning, Databricks Community Edition gives you a free option to experiment with. But you need an account either way.

How Do You Set Up a Databricks Environment for Power BI?

If you’re new to Databricks, your first step is getting an environment ready. The Community Edition works well for learning because it’s free and runs in the cloud. Getting this setup right matters for everything that comes after.

Step-by-Step Environment Configuration:

Start by creating a cluster in your Databricks workspace. Open the “Compute” page (labeled “Clusters” in older versions of the UI) and create a new cluster. It gives you the computing power you need to run data jobs and queries.
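If you prefer scripting to clicking, a cluster can also be requested through the Databricks Clusters API (`POST /api/2.0/clusters/create`). The sketch below only assembles the request and does not send it. It assumes your workspace allows REST API access with a personal access token; the helper name, workspace URL, token, runtime version, and node type are all placeholders you would replace with values valid in your workspace.

```python
import json


def build_create_cluster_request(workspace_url, token, cluster_name,
                                 spark_version, node_type_id,
                                 num_workers=1, autotermination_minutes=30):
    """Hypothetical helper: build (url, headers, body) for the Clusters API."""
    url = workspace_url.rstrip("/") + "/api/2.0/clusters/create"
    headers = {
        "Authorization": f"Bearer {token}",
        "Content-Type": "application/json",
    }
    body = {
        "cluster_name": cluster_name,
        "spark_version": spark_version,  # Databricks Runtime version key
        "node_type_id": node_type_id,    # cloud-specific VM type
        "num_workers": num_workers,
        # Shut the cluster down when idle so it stops accruing cost
        "autotermination_minutes": autotermination_minutes,
    }
    return url, headers, json.dumps(body)


# Placeholder values for illustration only
url, headers, payload = build_create_cluster_request(
    "https://adb-1234567890123456.7.azuredatabricks.net/",
    "<personal-access-token>",
    cluster_name="powerbi-demo",
    spark_version="13.3.x-scala2.12",
    node_type_id="Standard_DS3_v2",
)
```

Sending it is a single authenticated POST, for example `requests.post(url, headers=headers, data=payload)`. Setting `autotermination_minutes` is worth keeping even for experiments, since an idle cluster otherwise keeps running.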

Next, head to the “Workspace” tab and create a notebook. Pick the language you prefer. Python, Scala, and SQL all work. You’ll use this notebook to get your data ready.

Now you need some data to work with, so create a Delta table with sample information. Use your notebook to create a database and a table. Delta Lake matters here because features like ACID transactions and schema enforcement help keep your data consistent and reliable.

Here’s a simple example using Python to create a Spark DataFrame and save it as a Delta table:

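```python
# Sample Python code to create a Delta table in a Databricks notebook
# (the notebook provides the `spark` session automatically)
student_data = [(1, "Pubudu", 10, 10000), (2, "Suranga", 20, 20000)]
student_schema = ["studentId", "StudentName", "DeptNo", "Salary"]

# saveAsTable fails if the target database does not exist yet
spark.sql("CREATE DATABASE IF NOT EXISTS landing")

df = spark.createDataFrame(data=student_data, schema=student_schema)
# overwrite lets you rerun the cell without a "table already exists" error
df.write.format("delta").mode("overwrite").saveAsTable("landing.Student_Table")
```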

After creating your table, run a SQL query such as `SELECT * FROM landing.Student_Table` to confirm that your data is there and looks right. Catching problems now is much easier than troubleshooting them later from inside Power BI.

How Do You Connect Power BI to Your Databricks Workspace?

Once your Databricks environment is ready, you can connect it to Power BI Desktop. The Power BI Databricks connector makes accessing your data straightforward.

Connecting Power BI Desktop:

Open Power BI Desktop and click “Get Data” on the Home tab. Then select “More…” to see all available data sources.

In the Get Data window, search for “Databricks” and select the connector for your platform (Azure Databricks for Azure workspaces, Databricks for AWS and GCP). Click Connect.

You’ll see a prompt asking for your connection details. For an all-purpose cluster, find them in the cluster’s configuration under Advanced options, on the JDBC/ODBC tab; for a SQL warehouse, open its Connection details tab. You need two values: the Server Hostname (the host part of your workspace URL, without https://) and the HTTP Path (the specific path for your cluster or SQL warehouse).
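Both values also appear inside the JDBC URL shown on the same tab. As a hedged sketch, the hypothetical helper below pulls the hostname and HTTP path out of such a URL so you can paste them into the Power BI dialog. It assumes the usual `jdbc:databricks://host:port/schema;key=value;...` layout (older driver versions use a `jdbc:spark://` prefix), and the sample URL is made up.

```python
def parse_databricks_jdbc_url(jdbc_url):
    """Hypothetical helper: return (server_hostname, http_path) from a JDBC URL."""
    prefix, sep, rest = jdbc_url.partition("://")
    if not sep or not prefix.startswith("jdbc:"):
        raise ValueError("not a JDBC URL")

    # Before the first ';' is host:port/schema; after it are key=value options
    location, _, params = rest.partition(";")
    host = location.split("/", 1)[0].split(":", 1)[0]

    options = {}
    for pair in params.split(";"):
        if "=" in pair:
            key, value = pair.split("=", 1)
            options[key.strip().lower()] = value.strip()

    http_path = options.get("httppath")
    if http_path is None:
        raise ValueError("JDBC URL has no httpPath parameter")
    return host, http_path


host, path = parse_databricks_jdbc_url(
    "jdbc:databricks://adb-1234567890123456.7.azuredatabricks.net:443/default;"
    "transportMode=http;ssl=1;AuthMech=3;httpPath=/sql/1.0/warehouses/abc123def456;"
)
```

Option keys are compared case-insensitively because JDBC URLs are not consistent about casing (`httpPath` vs `HTTPPath`).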

Choose an authentication method and sign in, for example with a personal access token or, on Azure, your Microsoft Entra ID account. After the connection succeeds, you can pick which tables or views you want to bring into Power BI. You can also write custom queries if you need specific data for your Databricks Power BI dashboard.

How Can You Build Visualizations and Refresh Data?

After loading data into Power BI, you can start building reports and dashboards that people can actually interact with. This is where the integration really shows its value: you take complex datasets and turn them into visual stories that make sense.

Creating Visualizations:

Switch to the Report view in Power BI. You’ll see all your imported tables and fields from Databricks in the Fields pane. Just drag the fields you want onto your report canvas to create charts, tables, and other visuals.

The drag-and-drop process lets you build reports quickly, and comprehensive Power BI services and consulting can help your team get up to speed even faster.

Refreshing Data:

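Business data changes constantly. When your Delta table updates, those changes need to show up in your Power BI reports. For imported data, click Refresh on the Home tab in Power BI Desktop. Visuals that use DirectQuery query Databricks directly, so they pick up changes without a data refresh.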

For reports you’ve published to the Power BI service, you can set up automatic refreshes on a schedule. Your dashboards then stay current as of the most recent refresh, with no manual steps. This matters most for near-real-time analytics, where timing is critical.
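Scheduled refresh is normally configured in the Power BI service UI, but the Power BI REST API exposes it too. A minimal sketch, assuming the Update Refresh Schedule In Group endpoint (`PATCH .../groups/{groupId}/datasets/{datasetId}/refreshSchedule`): the hypothetical helper builds the URL and JSON body without sending them, and checks two limits that are assumptions here, half-hour time slots and at most eight refreshes per day on shared (Pro) capacity.

```python
import json

VALID_DAYS = {"Monday", "Tuesday", "Wednesday", "Thursday",
              "Friday", "Saturday", "Sunday"}


def build_refresh_schedule(group_id, dataset_id, days, times,
                           time_zone="UTC", max_per_day=8):
    """Hypothetical helper: build (url, json_body) for a refresh schedule update."""
    unknown = set(days) - VALID_DAYS
    if unknown:
        raise ValueError(f"unknown days: {sorted(unknown)}")
    # Assumed limit: 8 scheduled refreshes per day on shared capacity
    if len(times) > max_per_day:
        raise ValueError(f"at most {max_per_day} refreshes per day")
    for t in times:
        hour, minute = t.split(":")
        # Assumption: refresh slots fall on the hour or the half hour
        if not (0 <= int(hour) <= 23 and minute in ("00", "30")):
            raise ValueError(f"unsupported time slot: {t}")

    url = (f"https://api.powerbi.com/v1.0/myorg/groups/{group_id}"
           f"/datasets/{dataset_id}/refreshSchedule")
    body = {"value": {"enabled": True, "days": list(days),
                      "times": list(times), "localTimeZoneId": time_zone}}
    return url, json.dumps(body)
```

For example, `build_refresh_schedule("<workspace-id>", "<dataset-id>", ["Monday", "Friday"], ["07:00", "13:30"])` describes a twice-daily refresh on two weekdays. The actual call would send the body as a PATCH with an Entra ID bearer token.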

What Are Some Advanced Connection Options?

The standard connection method works well, but companies often need more advanced setups for security, performance, and automation. Knowing these options helps you get the most from the integration.

For automated and secure machine-to-machine authentication, you can set up a service principal on Azure Databricks. This is smart for production environments because you’re not relying on individual user credentials when the Power BI service connects.
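Under the hood, a service principal signs in with the OAuth 2.0 client-credentials flow rather than a user’s password. The sketch below assumes a Microsoft Entra ID service principal and only builds the token request; the helper name, tenant ID, client ID, and secret are placeholders, and `2ff814a6-3304-4ab8-85cb-cd0e6f879c1d` is the well-known Entra application ID for Azure Databricks. Power BI performs its own token exchange once you configure the credential, so treat this as an illustration of the flow rather than a required step.

```python
from urllib.parse import urlencode

# Well-known application ID of the Azure Databricks resource in Entra ID
AZURE_DATABRICKS_RESOURCE_ID = "2ff814a6-3304-4ab8-85cb-cd0e6f879c1d"


def build_token_request(tenant_id, client_id, client_secret):
    """Hypothetical helper: build (url, form_body) for a client-credentials token."""
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    form = {
        "grant_type": "client_credentials",  # no user involved
        "client_id": client_id,
        "client_secret": client_secret,
        # ".default" requests the permissions already granted to the app
        "scope": f"{AZURE_DATABRICKS_RESOURCE_ID}/.default",
    }
    return url, urlencode(form)
```

The form body is POSTed with `Content-Type: application/x-www-form-urlencoded`, and the JSON response carries an `access_token`. Keep the client secret in a secret store rather than in code.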

If you want better performance, consider switching from the default ODBC driver to the ADBC driver. The Arrow-based driver is built for high-speed data transfer between databases and analytics applications. It can make your data load noticeably faster in Power BI.

For pure business intelligence work, connecting Power BI to a Databricks SQL warehouse instead of an all-purpose cluster often makes more sense. SQL warehouses are tuned for SQL queries and many concurrent BI users, so they’re usually more efficient and cost less for Power BI workloads.
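The HTTP Path itself tells you which kind of compute a connection uses. As a hedged sketch, the hypothetical `compute_type` helper below classifies a path by its shape, assuming the formats Databricks typically shows: `/sql/1.0/warehouses/<id>` for SQL warehouses (older workspaces may show `/sql/1.0/endpoints/<id>`) and `sql/protocolv1/o/<org-id>/<cluster-id>` for all-purpose clusters.

```python
import re

# Assumed HTTP path shapes for the two kinds of compute
WAREHOUSE_PATH = re.compile(r"^/?sql/1\.0/(warehouses|endpoints)/[\w-]+$")
CLUSTER_PATH = re.compile(r"^/?sql/protocolv1/o/\d+/[\w-]+$")


def compute_type(http_path):
    """Hypothetical helper: classify an HTTP Path by the compute it targets."""
    if WAREHOUSE_PATH.match(http_path):
        return "sql_warehouse"
    if CLUSTER_PATH.match(http_path):
        return "all_purpose_cluster"
    return "unknown"
```

A check like this can flag production reports that still point at an all-purpose cluster before you move them to a SQL warehouse.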

These advanced configurations, including smart Databricks integrations with other tools, are where you really see the benefits of working with a certified Databricks consulting partner.

How Can Beyond Key Help You Succeed?

Handling a large-scale Power BI Databricks integration takes real expertise. Beyond Key is a certified Databricks consulting partner, and we help you deploy, manage, and optimize your entire data ecosystem. We build solutions that fit your specific needs for data engineering, machine learning, and cloud integration. Your infrastructure ends up both powerful and cost-effective. Our team doesn’t just get you set up quickly. We make sure you’re ready for long-term success with ongoing support and knowledge sharing.

Conclusion: Turning Data into Actionable Insights

Integrating Power BI and Databricks is more than a technical connection. It’s a strategic decision that helps your organization turn raw data into a real competitive edge.

Following this guide, you can build an analytics environment that’s robust, scales when you need it to, and helps teams collaborate. As you keep going, think about exploring advanced features like scheduled data refresh, strong governance practices, and optimization techniques.

From learning the basics of Azure Databricks to asking questions in natural language with tools like AI/BI Genie, the platform keeps getting more capable. Power BI and Databricks together give you the tools to tell meaningful stories with your data, and those stories drive informed decisions across your whole enterprise.

Frequently Asked Questions

1. What are the main data connectivity modes in the Power BI Databricks connector?

The connector gives you two main options: Import and DirectQuery. Import mode loads data into Power BI’s in-memory engine, which makes your visuals run fast. DirectQuery mode sends queries straight to Databricks in real time. This works well for really large datasets or when you need data that’s current right now. But your dashboard performance can vary based on how complex your queries are and how well your Databricks cluster or SQL warehouse is performing.
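The trade-off in this answer can be written as a small decision rule. The hypothetical helper below is a rough heuristic, not official guidance: `size_limit_gb` is an assumed threshold you would tune to your Power BI capacity, and real models can also mix both modes in a composite model.

```python
def suggest_storage_mode(estimated_size_gb, needs_live_data, size_limit_gb=1.0):
    """Hypothetical heuristic: suggest Import or DirectQuery for a table."""
    # Data must be current at query time: query Databricks directly
    if needs_live_data:
        return "DirectQuery"
    # Too large to load comfortably into memory (assumed threshold)
    if estimated_size_gb > size_limit_gb:
        return "DirectQuery"
    # Otherwise Import gives the fastest visuals
    return "Import"
```

For example, a small lookup table refreshed nightly would come back as `"Import"`, while a multi-terabyte event table would come back as `"DirectQuery"`.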

2. Can I use Power BI with the free Databricks Community Edition?

Yes, you can connect Power BI to Databricks Community Edition for learning and trying things out. The process is the same as connecting to a regular workspace. But the Community Edition has limits on cluster size, how long it stays running, and what features you get. For serious production work at your company, you need a full Databricks subscription on a cloud provider like Azure, AWS, or GCP. That gives you the scalability, reliability, and complete features you need.

3. How does the Arrow Database Connectivity (ADBC) driver improve performance?

The ADBC driver uses the Apache Arrow format, which stores data in columns in memory and is optimized for analytical queries. When Power BI uses the ADBC driver, data moves from Databricks to Power BI more efficiently than with a traditional ODBC driver because there is less serialization and deserialization overhead. The result is faster data loading, especially with large datasets, and reports that feel more responsive while you build them.
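The difference between row-oriented and columnar layouts is easy to see in miniature. This pure-Python sketch only illustrates the idea and is not Apache Arrow itself (Arrow stores each column in typed, contiguous memory buffers). It pivots the sample student rows from earlier into one list per column, so an aggregate like total salary only has to read a single column.

```python
# Row-oriented: one tuple per record, as a row-based driver delivers them
rows = [(1, "Pubudu", 10, 10000), (2, "Suranga", 20, 20000)]
names = ["studentId", "StudentName", "DeptNo", "Salary"]


def to_columns(rows, names):
    """Pivot row tuples into one list per column (a columnar layout)."""
    return {name: [row[i] for row in rows] for i, name in enumerate(names)}


columns = to_columns(rows, names)

# An aggregate reads just the one column it needs
total_salary = sum(columns["Salary"])
```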

4. What is the difference between connecting to a Databricks cluster versus a SQL warehouse?

An all-purpose cluster handles different kinds of work, including data engineering and data science tasks using notebooks. A Databricks SQL warehouse (it used to be called SQL endpoint) is built specifically for high-performance business intelligence and SQL queries. For a Power BI Databricks integration, connecting to a SQL warehouse usually makes the most sense. It handles multiple users better, responds faster to SQL queries, and can save you money for BI work because it’s designed exactly for that purpose.

5. How do I handle data refresh for a published Power BI report connected to Databricks?

After you publish your report from Power BI Desktop to the Power BI service, open the semantic model (dataset) settings and configure credentials for your Databricks data source. If your workspace is reachable only from a private network, such as a VNet-secured deployment, you’ll also need an on-premises or virtual network data gateway. Once that’s done, turn on scheduled refresh and pick how often it should run, like daily or hourly. Your published Databricks Power BI dashboards and reports then update from your Databricks tables automatically, without manual refreshes.
